In a nutshell:

- Data leakage is a critical issue that can sabotage predictive models by allowing outside information to influence the model-building process.

- This can lead to misleadingly high accuracy rates on paper, but the model will likely perform poorly in real-world scenarios.

- An example of data leakage is including information in the dataset that would not be available when predictions are made.

- To prevent data leakage, meticulously document the creation and update dates of all variables and exclude any that could provide future information.

- By being vigilant about the timeline of your data, you can build accurate and trustworthy predictive models.

Zohar explains the issue of data leakage in the video above — or read on for more!

Have you ever wondered why your seemingly perfect predictive model fails miserably in the real world? You're not alone. Today, we're diving into a critical issue that plagues many data scientists and machine learning practitioners: data leakage.

This silent killer of predictive models can turn your hard work into nothing more than a glorified random number generator. So, what exactly is data leakage, and how can you protect your models from its insidious effects?

The Sneaky Culprit: What is Data Leakage?

Data leakage is a deceptive problem that occurs when information from outside the training dataset sneaks its way into the model-building process. In simpler terms, it's when your model gains access to data that wouldn't be available at the time of making a real-world prediction. This might sound harmless, but it's far from it.

Imagine you're a detective trying to solve a crime, but someone accidentally gives you information from the future. Sure, you'd solve the case quickly, but your methods wouldn't work for any other investigations. That's essentially what happens with data leakage – your model becomes a cheater, using information it shouldn't have access to.

The Dangers of Data Leakage: Why Should You Care?

Here's where things get scary. When data leakage occurs, your model can appear to perform brilliantly on paper. You might see accuracy rates that make you want to celebrate. But don't be fooled – this is a mirage.

In reality, once you deploy this model to production, it can perform far worse than its test results suggested, sometimes little better than a coin flip. Why? Because in the real world, the leaked data isn't available when the model makes predictions. Your model has learned to rely on information from the future, which doesn't exist yet when it's time to make actual predictions.

This false sense of security can lead to disastrous consequences. Imagine making critical business decisions based on a model that's essentially guessing. The financial and reputational damage could be severe.

Real-World Example: The Deposit Dilemma

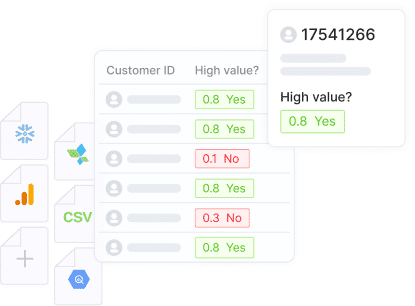

Let's break this down with a practical example. Say you're trying to predict whether a customer will make their first deposit on a financial platform. You gather historical data about customer behavior, website visits, demographics, and so on. So far, so good.

But here's the trap: you include a field in your dataset that shows whether the customer has ever withdrawn money. At first glance, this might seem like useful information. However, think about it: a customer can only withdraw money after they've made a deposit.

By including this field, you've inadvertently given your model knowledge of the future. Whenever it sees a "Yes" in the withdrawal field, it knows with 100% certainty that this customer made a deposit. In the real world, when you're trying to predict a customer's first deposit, that signal isn't there: a customer who hasn't deposited yet can't have withdrawn, so the field reads "No" for everyone you actually need to score.
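To make the trap concrete, here is a minimal Python sketch on a handful of made-up customer records. The field names (`visits`, `has_withdrawn`, `made_deposit`) and the helper functions are hypothetical, chosen only for illustration. It shows the withdrawal flag looking perfect on historical data while carrying no information for the customers you actually want to score.

```python
# Hypothetical historical records, assembled *after* outcomes were known.
# Field names and values are illustrative only, not a real schema or dataset.
historical = [
    {"visits": 12, "has_withdrawn": True,  "made_deposit": True},
    {"visits": 3,  "has_withdrawn": False, "made_deposit": False},
    {"visits": 8,  "has_withdrawn": True,  "made_deposit": True},
    {"visits": 5,  "has_withdrawn": False, "made_deposit": True},
    {"visits": 1,  "has_withdrawn": False, "made_deposit": False},
    {"visits": 9,  "has_withdrawn": True,  "made_deposit": True},
]

def leaky_rule(row):
    """Predict a deposit whenever the withdrawal flag is set."""
    return row["has_withdrawn"]

def precision_when_flagged(rows):
    """Share of rows with has_withdrawn=True that actually deposited."""
    flagged = [r for r in rows if r["has_withdrawn"]]
    return sum(r["made_deposit"] for r in flagged) / len(flagged)

# At prediction time nobody has deposited yet, so nobody can have withdrawn:
# the flag is False for every customer we actually want to score.
prospects = [{"visits": v, "has_withdrawn": False} for v in (2, 7, 11)]

print(precision_when_flagged(historical))   # 1.0 on historical data
print([leaky_rule(p) for p in prospects])   # [False, False, False]
```

The flag is a consequence of the outcome rather than a cause of it, which is why its perfect historical precision says nothing about its usefulness at prediction time.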

Preventing Data Leakage: Practical Tips

So, how do you protect your models from this sneaky problem? The key is vigilance and documentation. Here's a golden rule: ensure that every field in your dataset has a clear and documented creation and update date.

Before including any variable in your model, ask yourself: "Would this information be available at the time I'm making the prediction?" If the answer is no, or if you're unsure, it's best to err on the side of caution and exclude the variable.
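One way to put this golden rule into practice is a small catalog that records each field's creation and last-update dates and filters out anything written after the prediction time. The sketch below assumes a hypothetical `FieldDoc` record and `usable_fields` helper with field-level dates; real pipelines often need to track availability per row instead. Fields with missing dates are treated as "unsure" and excluded, matching the err-on-the-side-of-caution advice.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional

@dataclass
class FieldDoc:
    """Hypothetical documentation entry for one dataset field."""
    name: str
    created_at: Optional[datetime]    # when the field was first populated
    last_updated: Optional[datetime]  # most recent write to the field

def usable_fields(docs: List[FieldDoc], prediction_time: datetime) -> List[str]:
    """Return names of fields whose documented dates are all at or before
    prediction_time. Missing dates count as 'unsure' and are excluded."""
    keep = []
    for doc in docs:
        if doc.created_at is None or doc.last_updated is None:
            continue  # undocumented: err on the side of caution
        if doc.created_at <= prediction_time and doc.last_updated <= prediction_time:
            keep.append(doc.name)
    return keep

# Illustrative catalog; names and dates are made up for the example.
cutoff = datetime(2024, 3, 1)
docs = [
    FieldDoc("website_visits", datetime(2024, 1, 5), datetime(2024, 2, 20)),
    FieldDoc("age_band", datetime(2023, 11, 2), datetime(2023, 11, 2)),
    FieldDoc("has_withdrawn", datetime(2024, 1, 5), datetime(2024, 4, 10)),
    FieldDoc("segment", None, None),  # dates never documented
]
print(usable_fields(docs, cutoff))  # ['website_visits', 'age_band']
```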

Pay special attention to fields that might be updated after the event you're trying to predict. These are often the culprits in data leakage scenarios.
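For fields that keep changing, a common safeguard is a point-in-time ("as-of") lookup: instead of taking a field's latest value, take the value it held at the moment of prediction. This minimal sketch assumes each field's changes are logged as a list of `(timestamp, value)` pairs sorted by time; the `value_as_of` helper and the `account_status` field are hypothetical.

```python
from bisect import bisect_right
from datetime import datetime

def value_as_of(history, prediction_time, default=None):
    """Return the field's value as it stood at prediction_time.

    history: list of (timestamp, value) tuples sorted by timestamp.
    Updates stamped after prediction_time are ignored, so later
    changes cannot leak into the feature.
    """
    times = [t for t, _ in history]
    idx = bisect_right(times, prediction_time)  # count of updates <= cutoff
    return history[idx - 1][1] if idx > 0 else default

# Hypothetical update log for one customer's "account_status" field.
status_history = [
    (datetime(2024, 1, 5), "registered"),
    (datetime(2024, 2, 14), "verified"),
    (datetime(2024, 3, 9), "funded"),  # written after the first deposit
]

cutoff = datetime(2024, 3, 1)
print(value_as_of(status_history, cutoff))  # verified
print(status_history[-1][1])                # funded (naive latest value)
```

Using the latest value instead would hand the model "funded", a status that only exists because the deposit already happened.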

By being meticulous about the timeline of your data, you can build accurate and trustworthy models, providing predictions you can use every day to make better-informed business decisions.

Learn more about how Pecan's predictive analytics platform can help you build predictive models faster and more easily than ever. Try a free trial or get a guided tour today.