In a nutshell:

- Advanced predictive analytics are in high demand for unlocking deeper insights from data.

- Machine learning algorithms like decision trees and SVMs form the foundation of predictive analytics.

- Ensemble learning methods like bagging and boosting improve prediction accuracy.

- Advanced statistical techniques like Bayesian inference and time series analysis enhance predictive modeling.

- Deep learning techniques such as neural networks and LSTM networks are essential for processing complex data patterns.

In advanced predictive analytics, machine learning opens up a whole new world of foresight, where accurate forecasting is more science than guesswork. These cutting-edge techniques blend the latest algorithms into powerful predictive recipes that can anticipate everything from consumer demands to market dynamics to operational shifts with pinpoint precision.

Like a master chef continuously tweaking and refining their signature dishes based on diner feedback, machine learning allows these predictive models to update and improve their formulas as new data streams in. The algorithms learn and evolve, ingesting new information to cook up visions of upcoming conditions and trends with incredible accuracy.

The demand for the delicious predictions offered by advanced predictive analytics has never been higher. Data analysts continually seek ways to go beyond traditional methods and unlock deeper insights from their data. By staying abreast of the latest advancements in predictive analytics, data analysts can deliver maximum value to businesses needing sophisticated forecasting and decision support.

To that end, we’re serving up the details on advanced predictive analytics and the techniques behind it: machine learning algorithms, ensemble modeling, advanced statistical techniques, and deep learning. Let's dig in.


Machine Learning Algorithms

Advancements in the field of predictive analytics would not be possible without machine learning algorithms. These algorithms learn patterns from input data and then apply what they have learned to make predictions or decisions, without being explicitly programmed with rules for the task.

We'll explore two types of machine learning algorithms here: basic and ensemble. Different projects are better suited for each, and each involves different strategies.

Basic Machine Learning Algorithms

Basic machine learning algorithms form the bedrock of predictive analytics. These algorithms, while often quite simple in their design and execution, learn from existing data and generate predictions that assist businesses in making data-driven decisions.

They are called "basic" not because they are primitive or simplistic, but because they form the foundational tools in a data analyst's toolkit. They are often the first step in analyzing data because they train quickly on structured data and, in the case of decision trees especially, produce results that are easy to interpret. Two widely used examples are:

Decision Trees: Decision trees are simple yet powerful algorithms used for both regression and classification tasks. They use a tree-like model of decisions based on the input features. Each internal node tests a feature, each branch corresponds to an outcome of that test, and each leaf holds a prediction. While they are easy to understand and interpret, decision trees are prone to overfitting: a deep, unpruned tree can memorize its training data and then perform poorly on unseen data.
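To make the idea of a decision rule concrete, here is a minimal sketch of how a tree might choose a single split. It assumes one numeric feature and uses Gini impurity as the split criterion, which is a common choice but not the only one. The `spend` feature and the churn labels are hypothetical toy data.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 means a pure node)."""
    total = len(labels)
    if total == 0:
        return 0.0
    return 1.0 - sum((count / total) ** 2 for count in Counter(labels).values())

def best_split(rows, labels, feature):
    """Find the threshold on one numeric feature that minimizes weighted Gini."""
    best_threshold, best_score = None, float("inf")
    values = sorted({row[feature] for row in rows})
    # Candidate thresholds sit halfway between consecutive distinct values.
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
        right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score

# Hypothetical toy data: monthly spend and whether the customer churned.
rows = [{"spend": 10}, {"spend": 20}, {"spend": 30}, {"spend": 80}, {"spend": 90}]
labels = ["churn", "churn", "churn", "stay", "stay"]
threshold, impurity = best_split(rows, labels, "spend")
print(threshold, impurity)  # 55.0 0.0
```

A full tree would apply this search recursively to each resulting group. Limiting how deep that recursion goes is one of the usual guards against overfitting.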

Support Vector Machines: Support Vector Machines (SVMs) are used primarily for classification problems, although they can also be used for regression. They plot each data item in n-dimensional space (where n is the number of features) and then find the hyperplane (a flat boundary with one dimension fewer than the feature space) that separates the classes with the widest possible margin, meaning the greatest distance to the nearest points of each class. With kernel functions, SVMs can also learn non-linear boundaries. They often achieve good accuracy and perform well on high-dimensional data.
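The sketch below does not train an SVM. It only illustrates the quantity an SVM tries to maximize: the margin, or the distance from a candidate hyperplane to the closest point. The points, labels, and the two candidate boundaries are made up for illustration.

```python
import math

def signed_distance(w, b, x):
    """Signed distance from point x to the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm

def margin(w, b, points, labels):
    """Smallest label-adjusted distance; positive only if every point
    lies on the correct side of the hyperplane."""
    return min(y * signed_distance(w, b, x) for x, y in zip(points, labels))

# Hypothetical 2-D points with labels -1 and +1.
points = [(1.0, 1.0), (2.0, 1.0), (4.0, 1.0), (5.0, 1.0)]
labels = [-1, -1, 1, 1]

# Two candidate vertical boundaries: x = 3 and x = 2.5.
margin_a = margin((1.0, 0.0), -3.0, points, labels)
margin_b = margin((1.0, 0.0), -2.5, points, labels)
print(margin_a, margin_b)  # 1.0 0.5
```

Both boundaries separate the classes, but the first leaves twice as much room around the nearest points, so it is the one a maximum-margin classifier would prefer.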

Ensemble Learning Methods

Where basic machine learning models often fall short, ensemble learning methods have proven to be of further assistance. Ensemble methods combine multiple learning models to increase the overall accuracy and robustness of predictions.

The key idea is that combining the predictions of several models can often yield better results than any single model could achieve.

This might seem counterintuitive. After all, isn't the point of machine learning to create the best possible model for a given problem? While that's true in theory, no model is perfect in practice. Each has its strengths and weaknesses, and these strengths and weaknesses are often complementary.

Let's take a closer look at some of the techniques that make up ensemble learning methods and how they compensate for each other's shortcomings while amplifying their best qualities.

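Bagging and Boosting: Bagging and boosting are two popular ensemble methods. Bagging, short for bootstrap aggregating, trains multiple models on random resamples of the training data (drawn with replacement) and aggregates their results, which reduces prediction variance and so helps curb overfitting. Boosting, by contrast, builds a strong model from weak ones by training them in sequence, with each new model focusing on the data points its predecessors found hard to predict. Boosting mainly reduces bias and often improves accuracy, but it can overfit if run for too many rounds, so it is usually paired with safeguards such as a small learning rate or early stopping.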
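As a rough illustration of bagging, the sketch below draws bootstrap samples, trains a deliberately simple weak learner on each, and combines them by majority vote. The weak learner (`train_stump`, a threshold halfway between the class means) and the toy data are assumptions made for this example, not a standard library routine.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]

def train_stump(sample):
    """Hypothetical weak learner: threshold halfway between the class means."""
    pos = [x for x, y in sample if y == 1]
    neg = [x for x, y in sample if y == 0]
    if not pos or not neg:
        # Degenerate resample with one class only: always predict that class.
        only = 1 if pos else 0
        return lambda x: only
    pos_mean, neg_mean = sum(pos) / len(pos), sum(neg) / len(neg)
    threshold = (pos_mean + neg_mean) / 2
    pos_above = pos_mean > neg_mean
    return lambda x: int((x > threshold) == pos_above)

def bagged_predict(models, x):
    """Majority vote across all models in the ensemble."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

rng = random.Random(0)  # fixed seed so the run is repeatable
data = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
models = [train_stump(bootstrap_sample(data, rng)) for _ in range(25)]
print(bagged_predict(models, 0.0), bagged_predict(models, 10.0))
```

Each individual stump sees a slightly different dataset and may place its threshold differently. The vote smooths out those differences, which is where the variance reduction comes from.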


Random Forest and Gradient Boosting Machines: Random Forest and Gradient Boosting Machines (GBMs) are two of the most popular ensemble learning methods. Random Forest is an ensemble of decision trees, often trained with the bagging method. It combines hundreds or even thousands of decision trees, leading to more stable and robust predictions.

GBMs, on the other hand, use boosting to create a strong predictive model. They train many models in a gradual, additive, and sequential manner, with each new model correcting the errors of its predecessor, leading to high-quality predictions.
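The sequential, error-correcting idea can be sketched in a few lines. This is a simplified illustration that assumes squared-error loss, a single numeric feature, and one-split regression "stumps" as the weak models. Production GBM libraries add many refinements, and the data and learning rate here are arbitrary.

```python
def fit_stump(xs, residuals):
    """Least-squares regression stump: one threshold, one mean per side."""
    best = None
    values = sorted(set(xs))
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def gradient_boost(xs, ys, rounds, learning_rate=0.5):
    """Start from the mean, then repeatedly fit a stump to the residuals."""
    base = sum(ys) / len(ys)
    stumps = []
    predictions = [base] * len(ys)
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump(x) for x, p in zip(xs, predictions)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)

def mse(model, xs, ys):
    """Mean squared error of a model on the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)

# Hypothetical toy data with a roughly linear trend.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.3]
baseline = gradient_boost(xs, ys, rounds=0)   # predicts the mean only
boosted = gradient_boost(xs, ys, rounds=20)
print(mse(baseline, xs, ys), mse(boosted, xs, ys))
```

The training error shrinks as rounds are added. That is also why boosted models are normally checked against held-out data: driving training error toward zero is not the same as generalizing well.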

Advanced Statistical Techniques in Predictive Analytics

Embracing advanced predictive analytics also means leaning heavily on advanced statistical techniques like Bayesian inference and time series analysis. These techniques provide the framework needed to make accurate predictions, extract valuable insights, and analyze patterns and trends over time.

If you’re new to all of this, though, you might not know what all of that means. Let’s explore those concepts in more depth.

Bayesian Inference and Probabilistic Modeling

Bayesian inference is a method of statistical inference that updates the probability of a hypothesis as more evidence or information becomes available. Through Bayesian inference, predictive models can adjust their predictions as they receive new data, improving the accuracy of future predictions. Probabilistic modeling, a critical part of Bayesian inference, uses the laws of probability to describe possible outcomes as a distribution of likelihoods rather than as a single certain value.

In the context of predictive analytics, Bayesian inference and probabilistic modeling offer unique benefits. By incorporating prior knowledge and evidence, Bayesian inference allows for more nuanced predictions, taking into account uncertainty and varying conditions. It provides a mathematical framework for updating our beliefs as fresh data arrives, enabling more responsive and refined predictive modeling.
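One of the simplest concrete cases of this updating is estimating a rate, such as a conversion rate, with a Beta prior. The sketch below assumes that setup (a Beta-Binomial model). The prior and the two batches of observations are invented numbers.

```python
def update_beta(prior_alpha, prior_beta, successes, failures):
    """Posterior Beta parameters after observing binomial data."""
    return prior_alpha + successes, prior_beta + failures

def beta_mean(alpha, beta):
    """Expected value of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

# Hypothetical prior belief: conversion rate around 20% (Beta(2, 8)).
alpha, beta = 2, 8
history = [beta_mean(alpha, beta)]

# Two hypothetical batches of (conversions, non-conversions).
for successes, failures in [(3, 7), (6, 4)]:
    alpha, beta = update_beta(alpha, beta, successes, failures)
    history.append(beta_mean(alpha, beta))

print(alpha, beta)  # 11 19
print(history)      # estimate moves from 0.2 to 0.25 to about 0.367
```

The estimate shifts gradually toward what the data shows instead of jumping to the latest batch's raw rate, and the full Beta distribution (not just its mean) is what lets an analyst state how uncertain the estimate still is.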

Moreover, probabilistic modeling contributes to the robustness and reliability of such predictions. It empowers analysts to quantify uncertainty and measure the likelihood of various outcomes rather than making absolute predictions.

This is a game-changing feature in high-stakes business environments where risk assessment is as important as forecasting itself. In industries like finance, healthcare, or meteorology, the ability to express predictions in terms of probabilities can be incredibly valuable.

Both Bayesian inference and probabilistic modeling align with the core tenets of advanced predictive analytics: they embrace complexity, learn from new evidence, and quantify uncertainty. By integrating these advanced statistical techniques into their toolkits, data analysts can provide more nuanced, adaptable, and actionable insights.

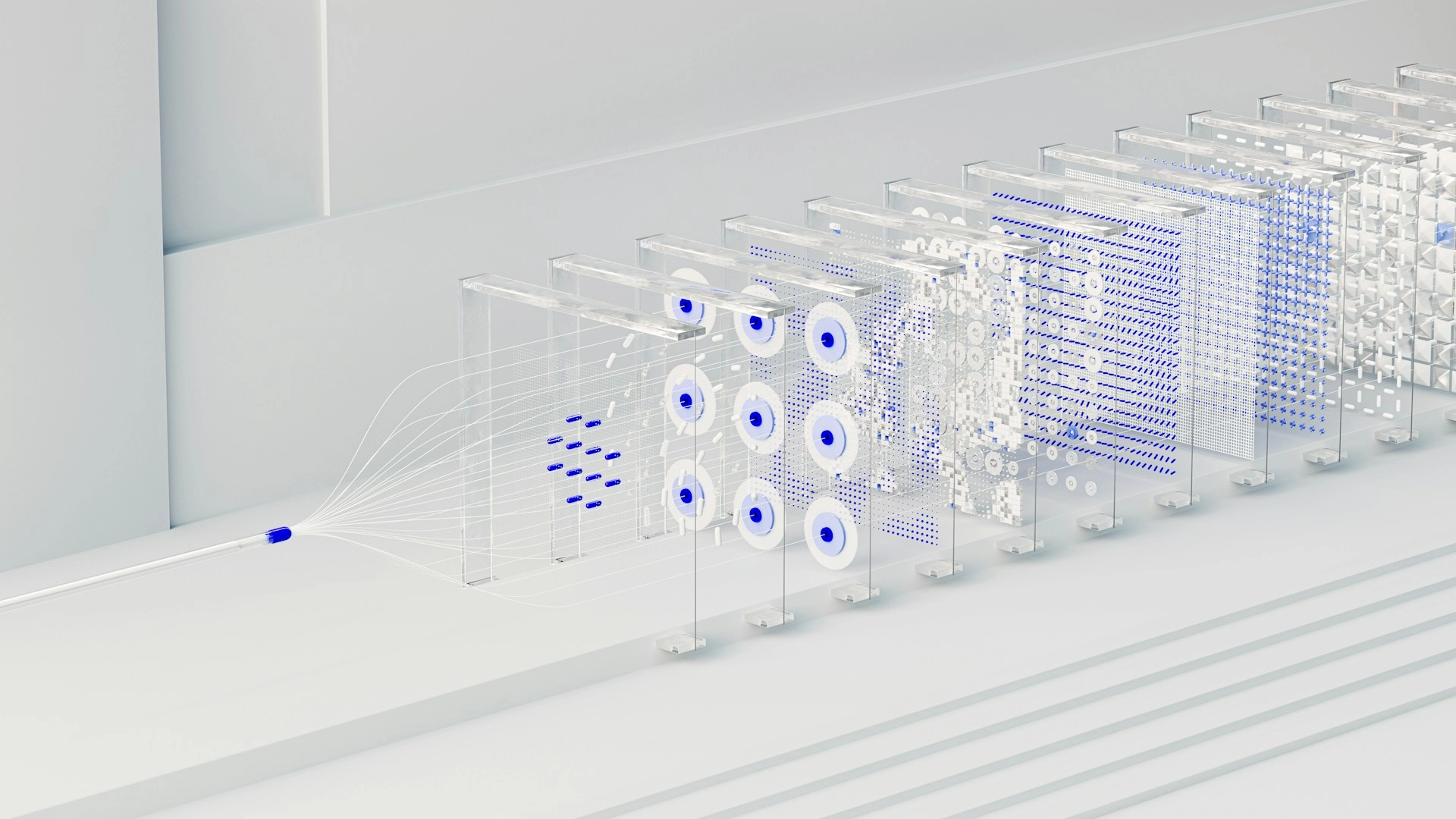

Photo by Google DeepMind on Unsplash

Time Series Analysis and Forecasting

Time series analysis is the part of predictive analytics that deals with data collected sequentially over time. This statistical technique identifies patterns or trends and uses them to predict future values. Time series forecasting is commonly used in stock market analysis, economic forecasting, and sales forecasting.

One of the most significant aspects of time series analysis is its ability to deal with data points collected at different intervals, whether daily, monthly, or yearly. By understanding the underlying patterns or trends in these data sets, analysts can predict future events (otherwise known as forecasting) with higher accuracy.

The stock market is an excellent example of this framework, as it relies heavily on time series analysis, taking account of historical data on prices, volumes, and other factors to forecast future trends. Similarly, sales forecasting in businesses uses past sales records and varying conditions like holidays, discounts, and economic factors to create accurate sales forecasts.

Several techniques can be used in time series analysis, including moving averages, exponential smoothing, and autoregressive integrated moving average (ARIMA) models. Each method has its strengths and weaknesses, and the choice of model can significantly influence the accuracy of the predictions.
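The two simplest of these techniques fit in a few lines each. The sketch below shows a trailing moving average and simple exponential smoothing. The `sales` series, window size, and smoothing factor are arbitrary illustrative values. ARIMA is considerably more involved and is not shown.

```python
def moving_average(series, window):
    """Trailing moving average; the first value covers the first full window."""
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]

def exponential_smoothing(series, alpha):
    """Simple exponential smoothing; higher alpha weights recent values more."""
    smoothed = [series[0]]
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed

sales = [10, 12, 14, 16, 18]  # hypothetical weekly sales
print(moving_average(sales, 3))          # [12.0, 14.0, 16.0]
print(exponential_smoothing(sales, 0.5)) # [10, 11.0, 12.5, 14.25, 16.125]
```

In both methods the last smoothed value can serve as a naive one-step-ahead forecast. Note that both lag behind a steadily rising series, which is one of the weaknesses that trend-aware models are meant to address.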

One potential hiccup when using time series analysis is the presence of seasonality, meaning variations that repeat at regular intervals. For instance, retail sales usually increase during the holiday season or during specific promotional periods. Recognizing, understanding, and adjusting for such seasonal patterns can make predictions more accurate and reliable.
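One basic way to adjust for seasonality is to estimate the typical effect of each position in the cycle and subtract it. The sketch below assumes an additive seasonal effect and a known period of four quarters. The `quarterly` figures are invented, with a deliberate spike in every fourth quarter.

```python
def seasonal_means(series, period):
    """Average value at each position in the seasonal cycle."""
    return [
        sum(series[i::period]) / len(series[i::period])
        for i in range(period)
    ]

def deseasonalize(series, period):
    """Subtract each position's seasonal effect (its mean minus the overall mean)."""
    overall = sum(series) / len(series)
    means = seasonal_means(series, period)
    return [
        value - (means[i % period] - overall)
        for i, value in enumerate(series)
    ]

# Hypothetical quarterly sales over two years; Q4 is the holiday quarter.
quarterly = [10, 10, 10, 30, 12, 12, 12, 32]
print(seasonal_means(quarterly, 4))  # [11.0, 11.0, 11.0, 31.0]
print(deseasonalize(quarterly, 4))   # [15.0, 15.0, 15.0, 15.0, 17.0, 17.0, 17.0, 17.0]
```

With the holiday spike removed, the underlying year-over-year growth becomes visible, and a forecast can be built on that trend before the seasonal effect is added back.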

Deep Learning Techniques

Deep learning techniques are another essential aspect of advanced predictive analytics. They can process large amounts of data and identify complex patterns and correlations that might be missed by other methods, making them a valuable tool for all kinds of analysis.

There are a number of deep learning techniques you should know about, with some of the most useful being:

Neural Networks

Neural networks are a set of algorithms designed to recognize patterns. They interpret sensory data through a kind of machine perception, labeling, or clustering of raw input. The patterns they recognize are numerical, contained in vectors, into which all real-world data—images, sound, text, or time series—must be translated.

Neural networks are loosely inspired by the structure of the human brain, hence their name. They feature interconnected layers of nodes or "neurons" that transmit and process information. They take multiple inputs, apply a series of weighted calculations, and output a prediction or decision.

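Each connection into a node carries a weight that determines how strongly that input influences the node's output. During training, the network compares its predictions with the known outcomes and uses the resulting error as feedback to adjust those weights (typically through an algorithm called backpropagation), leading to improved performance over time.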
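The weight-adjustment loop is easiest to see with a single neuron. The sketch below trains one sigmoid neuron with gradient descent to reproduce a logical AND. The task, learning rate, and epoch count are illustrative choices, and a real network stacks many such neurons and propagates the error backward through the layers.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """Weighted sum of the inputs passed through the activation function."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_neuron(samples, epochs=5000, learning_rate=0.5):
    """Adjust the weights a little after every sample, in proportion to the error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = predict(weights, bias, inputs) - target
            # Each weight moves against the error, scaled by its own input.
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

# Toy task: output 1 only when both inputs are 1 (logical AND).
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_neuron(and_gate)
for inputs, target in and_gate:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

The neuron starts with all weights at zero and outputs 0.5 for everything. The repeated small corrections are what push its outputs toward the targets.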

This iterative process of adjusting and learning allows neural networks to adapt to changing inputs and conditions, making them highly versatile in their applications. From image recognition and sentiment analysis to forecasting and anomaly detection, neural networks have become a cornerstone of advanced predictive analytics.


Convolutional Neural Networks (CNN)

CNNs are a type of deep neural network mainly used to process visual imagery. They slide small learned filters across an input image to detect features such as edges and textures, assign importance to those features, and use them to distinguish one image or object from another.
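The core operation inside a CNN layer is sliding a small filter over the image and recording how strongly each patch matches it. The sketch below shows that operation with no padding and a stride of one. The tiny `image` and the hand-written `vertical_edge` filter are illustrative only, since a real CNN learns its filter values during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    return the sum of elementwise products at each position."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# A 3x4 "image": dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written filter that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 1], [-1, 1]]
feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The output is zero over the flat regions and peaks exactly where the brightness changes, which is how a filter "assigns importance" to one part of an image over another.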

Recurrent Neural Networks (RNN)

Unlike traditional neural networks, RNNs are designed with temporal data in mind. They can process sequences of data points, such as time series or natural language, which makes them ideal for tasks like speech recognition, language modeling, and translation. RNNs achieve this by incorporating loops in the network's architecture, allowing information to be passed from one step in the sequence to another.
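The loop in an RNN can be reduced to one line: the new hidden state is a function of the current input and the previous hidden state. The sketch below uses a single scalar hidden unit with fixed, made-up weights (`w_input`, `w_hidden`) purely to show how information is carried forward. A trained RNN would learn these values and use vectors and matrices instead.

```python
import math

def rnn_forward(inputs, w_input=0.5, w_hidden=0.8, bias=0.0):
    """Run a one-unit recurrent cell over a sequence and return every hidden state."""
    hidden = 0.0
    states = []
    for x in inputs:
        # The previous hidden state feeds back into the current step.
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        states.append(hidden)
    return states

early_signal = rnn_forward([1.0, 0.0, 0.0])
late_signal = rnn_forward([0.0, 0.0, 1.0])
print(early_signal)
print(late_signal)
```

Both sequences contain the same values, but the final hidden states differ because the order matters. In the first sequence the state is still non-zero after the signal has passed, although it shrinks at each step, which hints at why plain RNNs struggle to hold on to information over many steps.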

However, standard RNNs struggle with long sequences because the influence of early inputs tends to fade during training (the vanishing gradient problem), which is where Long Short-Term Memory networks (LSTMs) come into play.

Long Short-Term Memory Networks (LSTM)

LSTMs are a special type of RNN designed to avoid the long-term dependency problem commonly associated with regular RNNs. They can remember information for long periods, which is advantageous for many applications, especially ones with longer sequence lengths.

For instance, in language translation, the meaning of a word can depend on the preceding clause or sentence, which could be quite a distance away in terms of sequence length. LSTMs enable the network to keep track of these long-term dependencies, leading to more accurate predictions.
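An LSTM keeps a separate cell state and uses gates (forget, input, and output) to decide what to keep, what to write, and what to expose at each step. The sketch below is a scalar version of one LSTM step using the standard gate equations. The gate parameters in `params` are set by hand for illustration (a forget gate held near 1, an input gate that opens only when the input is 1), whereas a real LSTM learns them from data.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, hidden, cell, params):
    """One step of a scalar LSTM cell; params maps gate name to (w_x, w_h, bias)."""
    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * hidden + b)

    forget = gate("forget", sigmoid)       # how much old memory to keep
    write = gate("input", sigmoid)         # how much new information to store
    candidate = gate("candidate", math.tanh)
    expose = gate("output", sigmoid)       # how much memory to reveal
    cell = forget * cell + write * candidate
    hidden = expose * math.tanh(cell)
    return hidden, cell

# Hand-set, hypothetical parameters chosen to make the memory effect visible.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (20.0, 0.0, -10.0),
    "candidate": (5.0, 0.0, 0.0),
    "output": (0.0, 0.0, 10.0),
}

hidden, cell = 0.0, 0.0
for x in [1.0] + [0.0] * 49:   # one signal followed by 49 empty steps
    hidden, cell = lstm_step(x, hidden, cell, params)
print(round(cell, 4), round(hidden, 4))
```

Fifty steps after the signal arrived, the cell state is still close to its original value, because the forget gate keeps multiplying it by a number just under one and the closed input gate stops anything from overwriting it.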

Advanced Model Evaluation and Validation

Crafting models is just one part of the predictive analytics process. Equally important is the evaluation and validation of these models to ensure they can make accurate predictions on unseen data. Some of these techniques include:

Cross-Validation Techniques

Cross-validation techniques estimate how well a model's results will generalize to an independent data set. They involve partitioning a sample of data into complementary subsets, fitting the model on one subset, and validating it on the other. In k-fold cross-validation, this is repeated so that every subset serves once as the validation set.
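The partitioning step of k-fold cross-validation can be sketched as follows. This minimal version splits the indices into contiguous folds without shuffling, which is an assumption made to keep the example deterministic. In practice the data is usually shuffled first, except for time series, where order must be preserved.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    # Spread any remainder across the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        validation = list(range(start, stop))
        train = [i for i in range(n_samples) if i < start or i >= stop]
        yield train, validation
        start = stop

folds = list(k_fold_indices(10, 3))
for train, validation in folds:
    print("train:", train, "validate:", validation)
```

A model would be fitted once per fold on the `train` indices and scored on the `validation` indices, and the scores averaged to get a more stable estimate than a single train/test split provides.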

Bias-Variance Tradeoff in Advanced Predictive Models

This tradeoff is a central challenge in supervised learning. Bias is the error introduced when a model is too simple to capture the underlying pattern (underfitting); variance is the error introduced when a model is so complex that it fits the noise in its training data (overfitting). Optimal performance requires balancing the two, and understanding this tradeoff helps in designing more effective predictive models.
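A small experiment can make the tradeoff tangible. The sketch below trains two deliberately extreme models on three hypothetical noisy samples of the same underlying relationship (where the true value of y equals x) and measures, at a single test point, how far the average prediction is from the truth (squared bias) and how much the predictions vary from sample to sample (variance). The datasets are hand-written for illustration, not drawn from a real process.

```python
def mean_model(train_xs, train_ys):
    """Very simple model: always predict the training average."""
    average = sum(train_ys) / len(train_ys)
    return lambda x: average

def nearest_neighbor_model(train_xs, train_ys):
    """Very flexible model: copy the y value of the closest training point."""
    def predict(x):
        nearest = min(range(len(train_xs)), key=lambda i: abs(train_xs[i] - x))
        return train_ys[nearest]
    return predict

def bias_squared_and_variance(build_model, datasets, xs, x0, true_value):
    """Train on each dataset, predict at x0, and summarize the predictions."""
    predictions = [build_model(xs, ys)(x0) for ys in datasets]
    average = sum(predictions) / len(predictions)
    bias_squared = (average - true_value) ** 2
    variance = sum((p - average) ** 2 for p in predictions) / len(predictions)
    return bias_squared, variance

xs = [0, 1, 2, 3, 4]
# Three hypothetical noisy samples of the relationship y = x.
datasets = [
    [0.5, 0.5, 2.5, 2.5, 4.5],
    [-0.5, 1.5, 1.5, 3.5, 3.5],
    [0.0, 1.0, 2.0, 3.0, 4.0],
]
simple = bias_squared_and_variance(mean_model, datasets, xs, x0=4, true_value=4.0)
flexible = bias_squared_and_variance(nearest_neighbor_model, datasets, xs, x0=4, true_value=4.0)
print("mean model (bias^2, variance):", simple)
print("nearest neighbor (bias^2, variance):", flexible)
```

The averaging model barely changes between samples but is consistently far from the truth, while the nearest-neighbor model is right on average but swings with the noise in each sample. Most practical models sit somewhere between these two extremes.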

Implementing Advanced Predictive Analytics in Business

Real-world applications of advanced predictive analytics are all around us. However, turning these advanced techniques into actionable strategies often comes with its own set of challenges.

Real-World Applications of Advanced Predictive Analytics

Predictive analytics has a wide range of applications, from predicting customer churn, forecasting sales, and assessing risk to improving operational efficiency.


Overcoming Challenges in Deploying Advanced Predictive Models

Despite their potential, advanced predictive models can be challenging to implement. Doing so often requires a significant investment in technology and personnel, and the complexity of the models can make them hard to understand and interpret. To overcome these challenges, businesses can turn to low-code, automated predictive analytics platforms.

Future Trends in Advanced Predictive Analytics

As predictive analytics continues to revolutionize various fields, staying abreast of its advancements unlocks a competitive advantage. The future is bright for predictive analytics, from AI advancements that make predictive models more accurate to new data sources like IoT devices that provide even more information. As predictive analytics becomes more advanced and pervasive, however, ethical and regulatory considerations are increasingly prominent. Issues around data privacy and potential bias in machine learning models are at the forefront of discussions.

Take Your Work to the Next Level With Advanced Predictive Analytics

Exploring advanced predictive analytics opens up a world of possibilities. These sophisticated techniques allow us to glean deeper insights, make more accurate predictions, and ultimately deliver more value to businesses.

Mastering these tools and techniques empowers data analysts to navigate the increasingly complex data landscape and drive competitive advantage for their organizations. The future of predictive analytics is not just about anticipating what's next – it's about shaping it.
