In a nutshell:

- Data validation is crucial for AI adoption, ensuring high-quality inputs for models.

- Noise in AI refers to irrelevant or meaningless data that can distort results.

- Traditional validation methods like manual checks and double-entry verification fall short in today's data landscape.

- Automated methods like rule-based validation, machine learning, and data quality tools are revolutionizing data validation.

- Establishing a data validation framework, balancing speed and accuracy, and integrating with AI systems are key best practices for successful data validation.

There's a saying meant to encourage people who don't much like cleaning: "Clean badly and often." The idea, of course, is that a casual swipe at the dust every day can accomplish the same outcome as one house-wide dusting session. Unfortunately, this motivational adage doesn't work for data validation. You need to clean well, and often, or there will be consequences.

Data validation is a critical component of any data initiative, especially in the realm of AI adoption. Efficiently and correctly validating data is vital for data analytics managers, directors, and chief data officers, who want to ensure high-quality inputs for AI models.

Data validation is especially critical when so much of an organization's revenue depends on the accurate operation of various data-driven processes.

In a recent survey, over half of respondents reported that 25% or more of their organization's revenue would be impacted by bad data. In short, bad data will affect more businesses more severely today as data and AI tools proliferate.

Let's make sure your data is tidy and ready for the hard work it performs in your business. We'll dive into potential data problems, the shortcomings of traditional validation methods, and the growing variety of efficient, AI-powered options. We'll also explore how and why you'll need to prioritize this concern to fuel successful AI initiatives.


What is Noise in AI?

Noise in the context of artificial intelligence refers to irrelevant or meaningless data or random fluctuations in the processed data. It can cause significant distortion and negatively impact the performance of AI and machine learning models.

In the world of AI, noise can come in various forms, such as incorrect data entries, missing values, outliers, or even irrelevant features in the data set. Noise can lead to inaccurate results and misleading data relationships — and ultimately, poor decisions based on these insights.

For instance, in an AI model designed to predict customer behavior, noise could be information about the weather on the day of data capture. That feature might not be relevant to the customer's purchasing patterns. This irrelevant data is noise. (However, it could be relevant to some models — umbrella sales, anyone? — so this is context-dependent.)

Similarly, if an AI model processes data with incorrect or random values due to a faulty sensor or human error during data entry, its predictions may be misleading or entirely incorrect.
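To make this concrete, here is a minimal Python sketch that flags two kinds of noise in a stream of sensor readings: missing values and outliers. It uses the modified z-score, a common robust statistic built on the median absolute deviation, with the conventional 3.5 cutoff. The function name, the sample readings, and that threshold are illustrative assumptions, not a prescribed method.

```python
from statistics import median


def flag_noisy_readings(readings, threshold=3.5):
    """Return indices of readings that are missing or look like outliers.

    Uses the modified z-score, which is based on the median absolute
    deviation (MAD). Unlike a mean/standard-deviation z-score, it is not
    dragged around by the very outliers it is trying to find.
    """
    present = [r for r in readings if r is not None]
    if not present:
        return list(range(len(readings)))  # every reading is missing
    med = median(present)
    mad = median(abs(r - med) for r in present)
    flagged = []
    for i, r in enumerate(readings):
        if r is None:
            flagged.append(i)  # missing value
        elif mad == 0:
            if r != med:
                flagged.append(i)  # any deviation from an otherwise constant signal
        elif 0.6745 * abs(r - med) / mad > threshold:
            flagged.append(i)  # outlier under the modified z-score
    return flagged


# Hypothetical temperature readings from one sensor
readings = [21.4, 21.6, 21.5, None, 21.7, 98.6, 21.5]
print(flag_noisy_readings(readings))
```

This flags the missing reading at index 3 and the implausible 98.6 at index 5. A flag is a prompt for review, not proof of error, because a genuine spike would be flagged too.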

Still, it's possible to cut through the noise. Data validation methods mitigate its impact by checking the accuracy and relevance of data, which in turn improves the performance of AI models.

Traditional Data Validation Methods

Let's take a moment to appreciate the past — and be glad we've moved on from its data validation methods.

Before the advent of automation and machine learning, data validation was largely a manual, time-consuming process. Even so, those traditional methods laid the groundwork for today's advanced techniques.

Manual Data Entry Checks

For many years, manual data entry checks were the primary method of validating data. This process involved human operators checking data for errors such as inconsistencies, duplicates, and inaccuracies. Despite being prone to human error, this method was effective at catching glaring mistakes and ensuring a basic level of data quality.

Double-Entry Verification

Manual data entry will never be perfect. One attempt to improve on it was double-entry verification. Two operators independently entered the same data, and any discrepancies between their entries were flagged for review. This method significantly increased data accuracy, but it was labor-intensive and time-consuming.
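Double-entry verification is easy to picture in code. The short Python sketch below compares two operators' entries field by field and reports every mismatch for a person to review. The function name and the sample records are hypothetical.

```python
def compare_entries(entry_a, entry_b):
    """Compare two operators' independent entries of the same records.

    Each entry maps a record ID to a dict of field values. Returns a list of
    (record_id, field, value_a, value_b) tuples, one per disagreement.
    A record or field missing from one side shows up as None on that side.
    """
    discrepancies = []
    for record_id in sorted(entry_a.keys() | entry_b.keys()):
        rec_a = entry_a.get(record_id, {})
        rec_b = entry_b.get(record_id, {})
        for field in sorted(rec_a.keys() | rec_b.keys()):
            a, b = rec_a.get(field), rec_b.get(field)
            if a != b:
                discrepancies.append((record_id, field, a, b))
    return discrepancies


# Hypothetical entries keyed in by two operators from the same paper forms
operator_1 = {101: {"name": "J. Smith", "zip": "10001"},
              102: {"name": "R. Patel", "zip": "94105"}}
operator_2 = {101: {"name": "J. Smith", "zip": "10010"},
              102: {"name": "R. Patel", "zip": "94105"}}

for d in compare_entries(operator_1, operator_2):
    print(d)
```

The only item flagged here is record 101, whose ZIP code one operator keyed as 10001 and the other as 10010. The comparison finds disagreements, but it cannot tell which entry is correct. That is why a human still reviews each flag.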

These traditional data validation methods served their purpose. However, with the sheer volume and complexity of data now being processed, these methods alone are insufficient (not to mention the vast amounts of boredom and fatigue they would produce). We're fortunate to live in a time with more efficient and reliable methods of data validation.


Traditional Data Validation Falls Short of Today’s Needs

As we wade further into the era of AI and machine learning, data keeps growing more complex. Today's data comes in a variety of types (structured, semi-structured, and unstructured), and each poses new validation challenges.

Structured Data

Structured data is data that is organized and formatted in a consistent way, often in tabular form, such as in a spreadsheet or a database. It includes data that has a clear, predefined, and logically organized structure. This type of data is easy to search, organize, and analyze as it adheres to a specific model or schema.

For example, a customer database with columns for name, address, and purchase history is structured data.

Structured data can be fairly straightforward to manage, and it's critical to the kinds of machine-learning projects that deliver significant business value.

Unstructured Data

Here's where things get even messier. Unstructured data refers to information that lacks a predefined data model or organization. It often exists in formats like text documents, images, videos, or social media posts.

Unlike structured data found in databases or spreadsheets, unstructured data doesn't fit neatly into rows and columns, making it challenging to analyze using traditional methods. The complexity of unstructured data lies in its varied sources, formats, and content, requiring specialized techniques to extract meaningful insights.

Validating unstructured data is tough for a few reasons. Without a uniform structure, it's hard to establish consistent validation criteria across different types of data. Frequently, this kind of data includes noise, irrelevant information, or ambiguities that can skew validation results.

A final hurdle is that unstructured data lacks context. Advanced natural language processing and machine learning algorithms are required to even attempt to accurately assess its validity.

If you're facing the task of validating unstructured data, you'll need innovative approaches and tools tailored to its unique characteristics. Only then can you trust in its reliability and usefulness in decision-making processes.

Semi-Structured Data

And then there's the middle ground: Semi-structured data is a hybrid between structured and unstructured data. It doesn't conform to the rigorous structure of data models associated with relational databases or other forms of structured data. However, it does contain tags, markers, or other structural elements that mean you can easily locate, identify, and sort key pieces of data.

For example, an email is a type of semi-structured data. It has structured elements such as the sender, recipient, date, and subject line, but also unstructured elements like the text in the body of the email.
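Python's standard `email` module shows how to validate the structured parts of an email while leaving the body as free text. This is a sketch under assumptions. Which headers count as required, and the rule that a simple body must not be empty, are illustrative choices.

```python
from email import message_from_string
from email.utils import parsedate_to_datetime

# Hypothetical choice of headers every message must carry
REQUIRED_HEADERS = ("From", "To", "Date", "Subject")


def validate_email(raw):
    """Validate the structured parts of a raw email and return a list of problems."""
    msg = message_from_string(raw)
    problems = [f"missing {h}" for h in REQUIRED_HEADERS if msg[h] is None]
    if msg["Date"] is not None:
        try:
            parsedate_to_datetime(msg["Date"])
        except (TypeError, ValueError):  # older Pythons raise TypeError, newer ValueError
            problems.append("unparseable Date")
    # The body is unstructured: only check that a simple (non-multipart) body isn't empty
    if not msg.is_multipart() and not msg.get_payload().strip():
        problems.append("empty body")
    return problems


good = (
    "From: ana@example.com\n"
    "To: ops@example.com\n"
    "Date: Tue, 02 Apr 2024 09:15:00 +0000\n"
    "Subject: Order 4521 delayed\n"
    "\n"
    "Hi team, the shipment for order 4521 is running two days late.\n"
)
bad = "From: ana@example.com\nDate: someday soon\n\nHello\n"

print(validate_email(good))
print(validate_email(bad))
```

The well-formed message returns an empty list. The second message has no `To` or `Subject` header and a date of "someday soon," so it returns one problem for each.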

As you can imagine, there are distinctive challenges for data validation with semi-structured data due to its inconsistency. But it also provides opportunities for more flexible and dynamic data interactions. This flexibility can be extremely valuable in AI applications, where fusing diverse types of data (as in multimodal AI) can generate more accurate models and predictions.

-

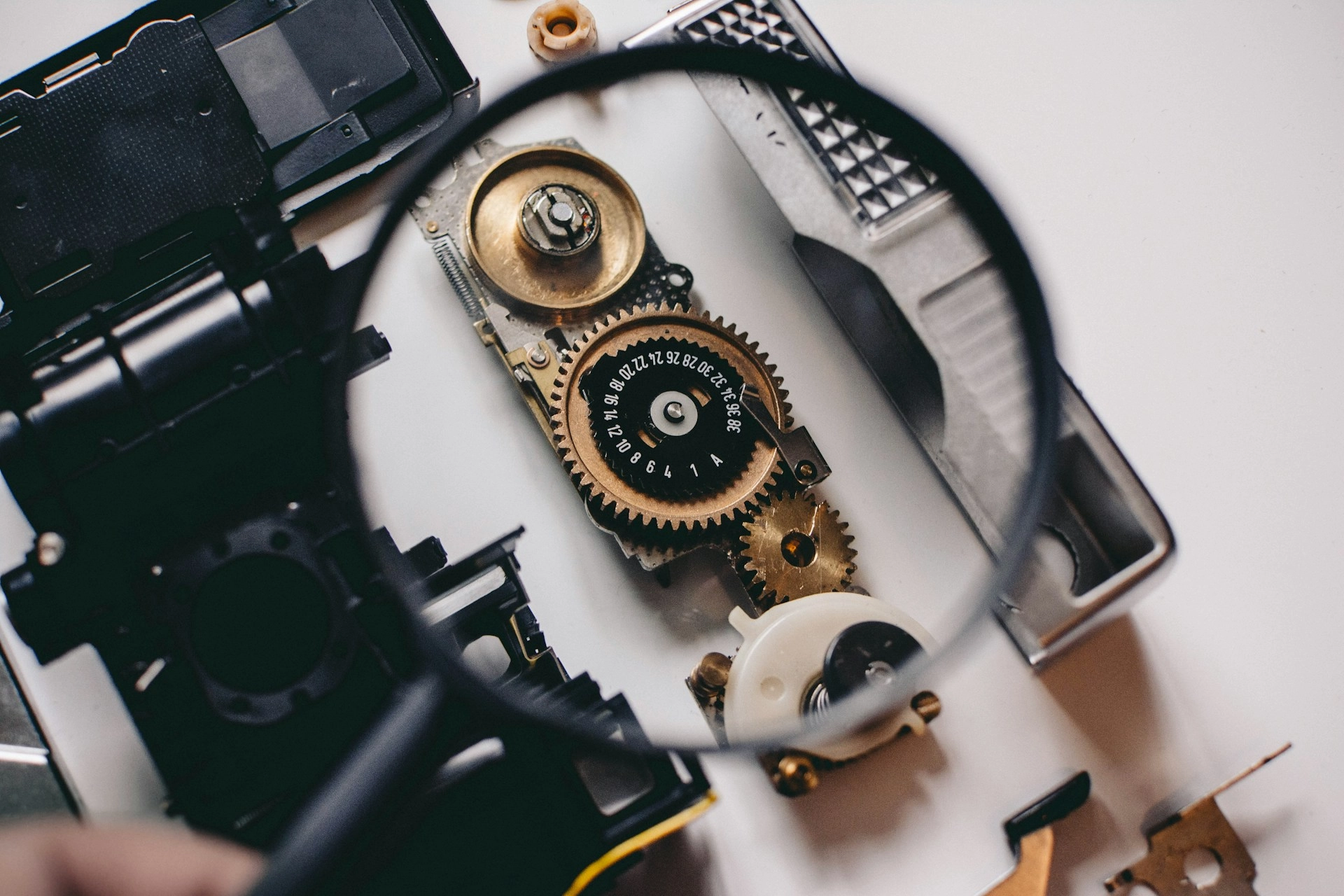

- Photo by Markus Winkler on Unsplash

The Evolving Need for Data Validation in a World of AI

The surge in data generation has resulted in the accumulation of colossal volumes of data. Unfortunately, large datasets are more likely to contain noisy or irrelevant data, making it even more essential to establish robust data validation processes.

This is especially true if you're planning to use this large quantity of data to fuel an AI model. The accuracy and relevance of the input data can make a significant difference in the model's performance and effectiveness.

As a result, the evolution of data validation methods is no longer optional but essential in the context of AI.

Traditional data validation methods are not obsolete, and they should not be written off completely. They teach the basic principles of data validation and can serve as a foundation for new approaches. Even as we move toward automated methods, some situations may still call for traditional methods, which can also offer a level of granularity that automated methods cannot replicate. That said, the growth and complexity of data in today's digital world demand more sophisticated and efficient methods.

Thankfully, this does not mean deploying hordes of humans to do double-entry validation. Instead, we're witnessing the rise of automated data validation methods, which can handle larger volumes of data more efficiently and accurately.

Automated Data Validation Methods

Automation is not only reducing manual labor but also drastically improving data accuracy.

The three most prominent automated data validation methods are rule-based validation, machine learning-based validation, and the use of data quality tools.

Rule-Based Data Validation

Rule-based data validation is a process wherein certain criteria or 'rules' are established for the data. If the data doesn't comply with these rules, it's flagged and reviewed. For instance, a rule might specify that a certain field must contain a numeric value or that there should be no null values in a specific column. You may have used a version of this in your favorite spreadsheet app.
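A rule-based validator can be as simple as a list of named checks applied to every row. The sketch below is a hypothetical example in plain Python. The column names and rules are invented for illustration. Real deployments usually express rules in a data quality tool or a schema library instead.

```python
def is_number(value):
    # bool is a subclass of int in Python, so exclude it explicitly
    return isinstance(value, (int, float)) and not isinstance(value, bool)


# Hypothetical rules for an orders table: (column, description, check)
RULES = [
    ("order_id", "must not be null", lambda v: v is not None),
    ("quantity", "must be numeric", is_number),
    ("quantity", "must be positive", lambda v: is_number(v) and v > 0),
    ("country", "must be a two-letter code",
     lambda v: isinstance(v, str) and len(v) == 2 and v.isalpha() and v.isupper()),
]


def validate(rows, rules=RULES):
    """Return (row_index, column, rule_description) for every rule violation."""
    violations = []
    for i, row in enumerate(rows):
        for column, description, check in rules:
            if not check(row.get(column)):
                violations.append((i, column, description))
    return violations


rows = [
    {"order_id": 1, "quantity": 3, "country": "US"},
    {"order_id": None, "quantity": "three", "country": "us"},
]
for v in validate(rows):
    print(v)
```

The first row passes every rule. The second row breaks four of them. Each violation is reported with its row, its column, and the rule it broke, so a reviewer knows exactly what to fix.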

This method significantly reduces the time and resources spent on data validation and ensures a higher level of accuracy compared to manual methods. It can catch complex discrepancies and inconsistencies that human validators might overlook.

Machine Learning-Based Data Validation

While rules are an improvement, what if AI could take over some of the work? Machine learning-based data validation is transforming traditional data validation techniques by using advanced algorithms to automate the process.

Here's what that looks like: training a model on a labeled dataset, allowing it to learn the patterns of valid and invalid data points, then predicting the validity of new data points. This approach provides real-time validation without the need for manual intervention.
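The Python sketch below is a toy illustration of that train-then-predict loop. It uses k-nearest neighbors, one of the simplest classifiers, and relies on the standard library only. The readings and their valid/invalid labels are hypothetical. A production system would more likely use an established ML library, scale its features, and train on far more data.

```python
from collections import Counter
from math import dist


def knn_predict(training, point, k=3):
    """Label a new point by majority vote of its k nearest labeled neighbors.

    `training` is a list of (features, label) pairs, where features is a
    tuple of numbers. Distance is plain Euclidean distance.
    """
    nearest = sorted(training, key=lambda item: dist(item[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical historical readings (temperature in C, relative humidity in %),
# each labeled valid or invalid by a past human review
training = [
    ((21.0, 45.0), "valid"), ((22.5, 50.0), "valid"),
    ((20.0, 40.0), "valid"), ((23.0, 55.0), "valid"),
    ((-40.0, 0.0), "invalid"), ((-39.5, 0.0), "invalid"),
    ((85.0, 100.0), "invalid"), ((84.0, 99.0), "invalid"),
]

for reading in [(21.8, 48.0), (-40.0, 1.0)]:
    print(reading, knn_predict(training, reading))
```

The typical reading is labeled valid, and the one resembling past sensor faults is labeled invalid. Unlike a fixed rule, the model learns its notion of "invalid" from the labeled examples, so that notion changes when the training data does.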

This sophisticated method is particularly good at handling large datasets, where manual validation processes may be time-consuming and prone to errors.

Whether it's identifying outliers, flagging inconsistencies, or verifying data accuracy, machine learning-based data validation offers a robust solution for ensuring the quality of datasets.

Data Quality Tools

The emergence of specialized data quality tools has taken automated data validation to a new level. These tools come with built-in algorithms and models that can identify errors, gaps, and inconsistencies in the data. They can handle vast volumes of data with high speed and precision.

Prominent data quality tools offer features like data profiling, data cleansing, and real-time validation. They enhance the overall efficiency of data validation and allow for more comprehensive error detection.
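Data profiling, the first feature in that list, is easy to sketch. The hypothetical Python function below summarizes each column of a small table. Dedicated tools compute many more statistics, but the underlying idea is the same.

```python
def profile(rows):
    """Summarize each column of a list-of-dicts table.

    Reports the null count, the number of distinct non-null values (values
    must be hashable), and the min/max over numeric values.
    """
    columns = sorted({col for row in rows for col in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v is not None]
        numeric = [v for v in non_null
                   if isinstance(v, (int, float)) and not isinstance(v, bool)]
        report[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
            "min": min(numeric) if numeric else None,
            "max": max(numeric) if numeric else None,
        }
    return report


# Hypothetical customer records
rows = [
    {"customer_id": 1, "age": 34, "city": "Austin"},
    {"customer_id": 2, "age": None, "city": "Boston"},
    {"customer_id": 2, "age": 212, "city": "Austin"},
]
for column, stats in profile(rows).items():
    print(column, stats)
```

Profiling doesn't decide what's wrong, but it makes problems easy to spot. Here `customer_id` has only 2 distinct values across 3 rows, which hints at a duplicate, and an `age` maximum of 212 is clearly implausible.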

Automated data validation methods are a significant leap from traditional methods. They allow for handling large datasets without compromising on accuracy or speed.

With today's AI and ML initiatives, a firm grasp of these methods is not just a luxury. It's a necessity.

The Importance of Data Validation in AI Adoption

The goal of artificial intelligence is to make machine processes mimic human decision-making. To achieve this, AI models rely heavily on data. The quality of the data fed into these models can significantly impact their performance and reliability.

Two key ways that data validation influences AI adoption are by ensuring quality input for AI models and mitigating bias and errors in AI outputs.

Ensuring Quality Input for AI Models

In the world of AI, the saying "garbage in, garbage out" holds especially true. An AI model is only as good as the data that feeds it. If the data is inaccurate, incomplete, or irrelevant, the insights generated by the AI model will also be flawed.

Effective data validation ensures the data is accurate, complete, and relevant. Rigorously applied data validation methods, especially automated ones like rule-based validation or data quality tools, drastically reduce the chance of feeding poor-quality data into AI models. As a result, the models are able to generate more reliable and valuable insights.

Mitigating Bias and Errors in AI Outputs

Data validation plays a significant role in mitigating bias and errors in AI outputs. Validation techniques help identify and eliminate inaccuracies, inconsistencies, and biases in the data, thereby mitigating the risk of AI perpetuating these issues.

For instance, if an AI model is trained on a dataset that lacks diversity, the outputs from the AI model may be biased. With thorough data validation, such biases can be identified and corrected before they impact the AI model's performance.
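One basic check for this kind of gap is to measure how each group is represented in the training data. In the minimal Python sketch below, the attribute name, the age bands, and the 10% minimum share are all hypothetical. A check like this surfaces only one kind of imbalance, so it is a starting point rather than a full fairness review.

```python
from collections import Counter


def underrepresented_groups(records, attribute, min_share=0.10):
    """Return {group: share} for every group below min_share of all records."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: round(n / total, 3)
            for group, n in counts.items() if n / total < min_share}


# Hypothetical training records, described here only by an age band
records = ([{"age_band": "18-34"}] * 60
           + [{"age_band": "35-54"}] * 35
           + [{"age_band": "55+"}] * 5)
print(underrepresented_groups(records, "age_band"))
```

With 60, 35, and 5 records in the three bands, only the 55+ band falls below the threshold, at 5% of records.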

AI shapes more and more decisions today. That means that ensuring the accuracy and fairness of AI predictions through data validation becomes increasingly important.


Best Practices for Data Validation

Ready to do more than just think about data validation? Implementation requires a thoughtful approach grounded in accepted best practices. Three keys are establishing a data validation framework, balancing speed and accuracy, and integrating data validation methods into AI systems.

Data Validation Frameworks

First, you'll need a coherent data validation framework to anchor a robust validation process. This framework outlines the key criteria and rules that the data must conform to, establishes a transparent validation process, and defines the metrics for data quality.
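The "metrics for data quality" part of a framework can be made concrete. The Python sketch below computes three commonly used dimensions (completeness, validity, and uniqueness) and compares each with a threshold. These metric definitions are one reasonable choice among several, and the thresholds and sample rows are hypothetical.

```python
def quality_metrics(rows, key, required, validators):
    """Compute dataset-level quality metrics, each a fraction from 0 to 1.

    completeness: share of required cells that are non-null
    validity:     share of non-null validated cells that pass their check
    uniqueness:   distinct key values divided by the number of rows
    """
    if not rows:
        raise ValueError("no rows to evaluate")
    n = len(rows)
    filled = sum(row.get(col) is not None for row in rows for col in required)
    completeness = filled / (n * len(required))
    checked = [(col, row[col]) for row in rows for col in validators
               if row.get(col) is not None]
    passed = sum(validators[col](value) for col, value in checked)
    validity = passed / len(checked) if checked else 1.0
    uniqueness = len({row.get(key) for row in rows}) / n
    return {"completeness": completeness, "validity": validity,
            "uniqueness": uniqueness}


# Hypothetical thresholds a framework might set for each metric
THRESHOLDS = {"completeness": 0.95, "validity": 0.99, "uniqueness": 1.0}


def evaluate(metrics, thresholds=THRESHOLDS):
    """Return {metric: True/False} depending on whether each threshold is met."""
    return {name: metrics[name] >= limit for name, limit in thresholds.items()}


rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": None, "age": 29},
    {"id": 3, "email": "c@example.com", "age": -5},
    {"id": 4, "email": "d@example.com", "age": 41},
]
metrics = quality_metrics(rows, key="id", required=["id", "email"],
                          validators={"age": lambda a: 0 <= a <= 120})
print(metrics)
print(evaluate(metrics))
```

In this sample, one missing email pulls completeness down to 0.875 and one impossible age pulls validity down to 0.75, so both fail. Every ID is distinct, so uniqueness passes. Writing the thresholds down explicitly is what makes the framework apply the same scrutiny to every dataset.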

Having a solid framework ensures that all data undergoes the same level of scrutiny, irrespective of its source or destination. This uniformity helps maintain data consistency and integrity across different systems and platforms.

There are several comprehensive frameworks available, such as DAMA-DMBOK (the DAMA Data Management Body of Knowledge) or the framework from the MIT Information Quality (MITIQ) program. These provide guidelines for managing and ensuring the quality of data.

Balancing Speed and Accuracy in Data Validation

There's a lot of pressure to expedite data and AI initiatives today and show ROI. However, in the pursuit of speed and agility, organizations still must ensure data accuracy. Inaccurate data undermines the reliability of predictions and models, posing significant risks to informed decision-making processes.

To be sure, there's a delicate balance between speed and accuracy. But prioritizing data validation is essential to safeguard the integrity of your predictive analytics endeavors.

Integration with AI Systems

Integrating data validation methods into AI systems is the next crucial step. By embedding automated data validation methods within your data and AI tools and platforms, data can be validated rapidly, ensuring ongoing quality checks.
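A common way to embed validation in an AI pipeline is a gate that runs before training. The gate quarantines failing rows and halts the run entirely if too many rows fail. The sketch below is a hypothetical, stdlib-only Python version. The 5% failure budget and the validity check are assumptions you would tune to your own data.

```python
class ValidationError(Exception):
    """Raised when a batch is too dirty to pass on to model training."""


def validation_gate(batch, is_valid, max_failure_rate=0.05):
    """Split a batch into clean and quarantined rows before training.

    Raises ValidationError if the share of failing rows exceeds
    max_failure_rate, so the pipeline stops instead of silently training
    on a bad batch.
    """
    clean, quarantined = [], []
    for row in batch:
        (clean if is_valid(row) else quarantined).append(row)
    failure_rate = len(quarantined) / len(batch) if batch else 0.0
    if failure_rate > max_failure_rate:
        raise ValidationError(f"{failure_rate:.1%} of rows failed validation")
    return clean, quarantined


# Hypothetical check: transaction amounts must not be negative
batch = [{"amount": 10.0}] * 98 + [{"amount": -1.0}] * 2
clean, quarantined = validation_gate(batch, lambda row: row["amount"] >= 0)
print(len(clean), len(quarantined))

dirty = [{"amount": 10.0}] * 90 + [{"amount": -1.0}] * 10
try:
    validation_gate(dirty, lambda row: row["amount"] >= 0)
except ValidationError as err:
    print("halted:", err)
```

A batch with 2 bad rows out of 100 passes, with those rows quarantined. A batch with 10 bad rows out of 100 raises `ValidationError` and stops the run. The gate is designed to fail loudly because a silently degraded training set is much harder to diagnose later.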

Furthermore, evolving AI models can accommodate more complex and specific data validation rules, helping to enhance data quality. Integrating data validation with AI platforms can reduce error rates and improve data handling efficiency.

These best practices in your data validation process will not only ensure that your data is of high quality but will also enable your AI models to deliver more accurate and reliable results.

The Future of Data Validation Methods

The future of data validation is evolving rapidly, driven by innovative trends and technologies that promise to revolutionize traditional validation methods. Emerging approaches such as automated validation powered by artificial intelligence and machine learning algorithms are reshaping how organizations ensure data accuracy and integrity.

Additionally, the integration of data validation into cloud-based platforms offers scalable and efficient solutions for managing validation processes across diverse datasets and systems.

Another potential innovation: blockchain technology can support data integrity by adding an extra layer of security and transparency to the validation process, enhancing trust in data sources.

Data Validation and Quality AI Output

Situated at the core of efficient and effective data management, data validation is an indispensable process for guaranteeing the quality of your data and, consequently, the output of your AI models.

Our journey through data validation methods began with manual techniques such as manual data entry checks and double-entry verification, and the field has advanced significantly since then. The shift toward automated approaches such as rule-based validation, machine learning-based validation, and data quality tools has been instrumental in handling the burgeoning volumes of data.

Data validation is a vital process that ensures the integrity and reliability of the data that fuels AI. The right data validation methods will provide the right signals to your AI models and, in turn, lead to more accurate, reliable, and actionable insights. Through proper validation, we say goodbye to noise and hello to the full potential of AI.

Ready to explore how your data validation methods can be the backbone of a successful AI initiative? Get in touch to chat about how Pecan can support your AI projects — and don't forget to ask about our automated data preparation.