If it ain’t broke, don’t fix it: This adage explains why many businesses still resist implementing machine learning (ML) and predictive modeling and, instead, rely solely on descriptive analytics (patterns and trends) of historical data.

Of course, many farmers once felt the same about threshing machines and cotton gins — until they saw how their neighbors were improving their yields and reducing waste by orders of magnitude.

By the same token, numerous organizations have done just fine using descriptive analytics to uncover what happened in the past and get some clues about what future sales, churn, demand, and so on might look like. But machine learning has created more powerful ways to use historical data, such as predictive modeling and prescriptive analytics. These ML-powered workflows still rely on historical data, but they use machine learning to spot patterns among millions of data points, and to do so in a flash, enabling marketing teams to generate more accurate and actionable insights.

The global predictive analytics market is projected to more than double from $17.42 billion in 2024 to $41.44 billion in 2028. And 42% of companies using AI and ML for improved decision-making said the profitability of their initiatives exceeded expectations, while just 1% reported they fell short.

Businesses that managed to survive and even thrive in the pre-ML era might wonder why they need to bother with predictive modeling. The biggest reason? It enables organizations, especially marketing teams, to make informed decisions in real time with insights they might never have come by otherwise. In this blog, we’ll explain 10 of the most common machine learning models and how they can be used in your marketing efforts.

10 of the most common ML models

To reap the rewards of ML predictive modeling, it helps to know exactly what it can do. The short answer: a lot. Fraud detection and prevention, customer segmentation, campaign optimization, pricing, and demand planning are just a few of its myriad purposes.

But not every predictive model can achieve every goal. A model that can pinpoint which customers are likely to lapse can’t necessarily determine which advertising channels will be most effective for reaching certain customer segments.

Below, we’ll look at 10 commonly used ML models and what they’re best used for.

Linear regression

This is one of the most frequently used models — in part because it’s one of the simplest. It uses one or more known, or independent, variables to predict a related but unknown, or dependent, variable.

What’s critical to remember is that the independent variables need to be correlated with the dependent variable, and the relationship should be roughly linear. Using consumers’ eye color to predict their likelihood to purchase high-end coffee would not be productive, as there’s no correlation between eye color and the consumption of premium coffee.

Linear regression models often use historical or trend data to predict future values. For instance, a retailer could use sales data from previous holiday seasons to gauge how much seasonal merchandise to stock for the upcoming holiday season or determine how to price new products based on the selling prices of similar SKUs.
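As a minimal sketch, assuming a single independent variable, simple linear regression can be fit with ordinary least squares in a few lines of plain Python. The function name `fit_simple_linear_regression` and the holiday-season sales figures are hypothetical, chosen only for illustration; a production model would typically use a library and more than one feature.

```python
from statistics import fmean


def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares with one independent variable: y ≈ intercept + slope * x."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    # Slope = covariance(x, y) / variance(x); the shared 1/n factors cancel out.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    # The fitted line always passes through the point of means (x_bar, y_bar).
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical history: units of seasonal merchandise sold in five past holiday seasons.
seasons = [1, 2, 3, 4, 5]
units_sold = [1200, 1350, 1490, 1660, 1800]

intercept, slope = fit_simple_linear_regression(seasons, units_sold)
forecast = intercept + slope * 6  # extrapolate to the upcoming (sixth) season
print(f"Each season adds about {slope:.0f} units; forecast for season 6: {forecast:.0f} units")
# prints: Each season adds about 151 units; forecast for season 6: 1953 units
```

Python 3.10 and later also ship `statistics.linear_regression`, which returns the same slope and intercept for this one-variable case.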

Common use cases: sales forecasting, customer lifetime value (CLV), pricing optimization, demand forecasting, budget allocation

Logistic regression

Like linear regression, logistic regression uses one or more independent variables to predict a dependent variable. But a linear regression model outputs continuous values, such as how many more people will purchase a product for each additional $10,000 of advertising spend. A logistic regression model, on the other hand, outputs a probability between 0 and 1, which is typically converted into a binary classification of the likely outcome. For example, it could predict whether a customer will churn (Yes/No) or help decide which marketing and sales leads to prioritize by answering, “Will this lead convert?”
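The sketch below, written in plain Python under simplifying assumptions, trains a logistic regression classifier with full-batch gradient descent on log loss. The lead data, feature choice, learning rate, and function names are hypothetical. The model outputs a probability; the 0.5 cutoff used to turn it into a Yes/No answer is a common default rather than a requirement.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function mapping any real score to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(weights, bias, x):
    """Probability that the outcome is 'Yes' (class 1) for feature vector x."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic_regression(X, y, lr=0.05, epochs=20000):
    """Full-batch gradient descent on the average log loss."""
    n, d = len(X), len(X[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # For log loss, the gradient w.r.t. the raw score is (predicted - actual).
            error = predict_proba(weights, bias, xi) - yi
            for j in range(d):
                grad_w[j] += error * xi[j]
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical leads: [site visits last month, marketing emails opened]; 1 = converted.
X = [[1, 0], [2, 1], [1, 1], [3, 0], [8, 5], [9, 6], [7, 4], [10, 7]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = train_logistic_regression(X, y)
p = predict_proba(weights, bias, [6, 4])
print(f"P(convert) = {p:.2f} -> {'Yes' if p >= 0.5 else 'No'}")
```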

Common use cases: customer churn prediction, customer segmentation and lead prioritization, email campaign effectiveness, and click-through rate (CTR) predictions of digital ads

Support vector machine (SVM)

While support vector machines can output continuous values, they’re most frequently used for classification, à la logistic regression models. Because logistic regression models are typically easier to implement, train, and interpret than SVMs, you might wonder why one would bother with the latter. A major reason: by using kernel functions, SVMs can handle more complex, or nonlinear, relationships than logistic regression models.

For instance, a logistic regression model might assess whether an email is spam based on whether it includes the word “free” in the subject line: if the word appears, the email is classified as spam. A support vector machine might also capture the relationship between “free” in the subject line and other phrases in the text. SVMs are also less prone to overfitting and tend to limit the effect of outliers.
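To illustrate the nonlinear point, here is a sketch of a kernel SVM trained with the Pegasos stochastic sub-gradient method and a Gaussian (RBF) kernel. This is one of several ways to train an SVM (libraries such as scikit-learn’s `SVC` use a different solver). The XOR-style toy data, `gamma`, `lam`, and all function names are assumptions for illustration; what matters is that no straight line separates the two classes, yet the kernel lets the SVM classify them.

```python
import math
import random


def rbf_kernel(a, b, gamma=2.0):
    """Gaussian (RBF) kernel: similarity that decays with the squared distance between a and b."""
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def decision_value(X, y, alpha, x):
    """Unscaled SVM score for x; only its sign matters for classification."""
    return sum(a * yj * rbf_kernel(xj, x) for a, xj, yj in zip(alpha, X, y) if a)


def train_kernel_svm(X, y, lam=0.1, iterations=2000, seed=0):
    """Kernelized Pegasos: stochastic sub-gradient descent on the regularized hinge loss.
    Labels must be -1 or +1. alpha[i] counts how often example i violated the margin."""
    rng = random.Random(seed)
    alpha = [0] * len(X)
    for t in range(1, iterations + 1):
        i = rng.randrange(len(X))
        # Implicit weights at step t: (1 / (lam * t)) * sum_j alpha_j * y_j * phi(x_j)
        if y[i] * decision_value(X, y, alpha, X[i]) / (lam * t) < 1:
            alpha[i] += 1  # margin violated: example i gains weight as a support vector
    return alpha


def svm_predict(X, y, alpha, x):
    return 1 if decision_value(X, y, alpha, x) >= 0 else -1


# Hypothetical XOR-style data with two binary features: the label is +1 when exactly
# one feature is present. No straight line separates the two classes.
X = [(0, 0), (1, 1), (0, 1), (1, 0)]
y = [-1, -1, 1, 1]

alpha = train_kernel_svm(X, y)
print([svm_predict(X, y, alpha, x) for x in X])
```

With a linear kernel (a plain dot product), no choice of weights classifies all four points correctly, which is also why a standard logistic regression model struggles here without hand-built interaction features.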

Common use cases: customer segmentation, fraud detection, ad targeting, product recommendations

Autoregressive integrated moving average (ARIMA)

An ARIMA model uses data collected over a period of time to forecast future demand, prices, and other numerical values. Just as a support vector machine could be considered the brainy sibling of a logistic regression model, ARIMA is a more sophisticated, robust cousin of linear regression. Time, particularly sequence, is central to an ARIMA model: it treats each value as the outcome of a process unfolding over time. The series is first differenced to remove trends (the “integrated” part), and each value is then predicted from previous values (the “autoregressive” part) and from past forecast errors (the “moving average” part). While a linear regression model might predict a business’s upcoming December sales based on its sales the previous December, an ARIMA model would also take into consideration sales during the months in between.
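The sketch below is a deliberately simplified ARIMA(1, 1, 0), meaning one autoregressive term, one round of differencing, and no moving-average terms, estimated by least squares in plain Python. The sales figures and function names are hypothetical. A full ARIMA(p, d, q) model with moving-average terms is normally estimated by maximum likelihood, for example with `statsmodels.tsa.arima.model.ARIMA`.

```python
from statistics import fmean


def fit_arima_110(series):
    """Fit ARIMA(1, 1, 0): difference once (the 'I'), then regress each difference on the
    previous one by least squares (the 'AR(1)' part). No moving-average terms."""
    diffs = [b - a for a, b in zip(series, series[1:])]
    prev, curr = diffs[:-1], diffs[1:]
    p_bar, c_bar = fmean(prev), fmean(curr)
    sxx = sum((p - p_bar) ** 2 for p in prev)
    sxy = sum((p - p_bar) * (c - c_bar) for p, c in zip(prev, curr))
    phi = sxy / sxx if sxx else 0.0  # AR coefficient; 0 if the differences never vary
    const = c_bar - phi * p_bar
    return const, phi


def forecast_arima_110(series, const, phi, steps=1):
    """Roll the model forward: predict the next change, then add it to the last level."""
    level, last_diff = series[-1], series[-1] - series[-2]
    forecasts = []
    for _ in range(steps):
        last_diff = const + phi * last_diff
        level += last_diff
        forecasts.append(level)
    return forecasts


# Hypothetical monthly sales ($ thousands), oldest first; every month informs the forecast.
monthly_sales = [120, 125, 133, 138, 147, 151, 160, 166]
const, phi = fit_arima_110(monthly_sales)
print(forecast_arima_110(monthly_sales, const, phi, steps=2))
```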

Common use cases: sales forecasting, campaign performance predictions, inventory planning

Decision tree

This model’s name reflects how its output looks when charted: like a tree with branches that divide into more branches. The root node is the entire data set. The data is then divided into decision nodes (the branches), each of which may be further divided depending on the data and the problem to be solved. At each node, the model chooses the feature and split point that best separate the outcomes, for example by minimizing an impurity measure such as Gini impurity. Because they can be used for both regression and classification tasks and because they can handle numerous variables or features, decision trees are highly flexible. And while their description might sound complicated, they’re actually used for breaking down complex data into simpler elements.

Say you want to determine which customers in a data set are most likely to resubscribe to your service. You have several features for each: age, lifetime value, level of engagement, household income, and gender. A decision tree model might first divide them into branches by gender. Then each gender branch might be split into more branches based on age group. Each of those gender-and-age branches might then be split by lifetime value, and so on, until the model can predict which segments of the data set are most and least apt to resubscribe.
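A minimal sketch of how a classification tree picks its splits, assuming numeric features and Gini impurity as the split criterion (the approach used by CART-style trees). The customer records are hypothetical, gender is omitted because it would first need to be encoded as a number, and the function names are our own.

```python
def gini(labels):
    """Gini impurity: 0.0 when every label in the node is the same."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(rows, labels):
    """Try every feature/threshold pair; return (impurity, feature, threshold) with the
    lowest weighted impurity, or None if no split separates the rows."""
    best = None
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [label for r, label in zip(rows, labels) if r[f] <= t]
            right = [label for r, label in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Split recursively until nodes are pure or max_depth is reached. Internal nodes are
    (feature, threshold, left_subtree, right_subtree); leaves are plain labels."""
    if depth < max_depth and gini(labels) > 0:
        split = best_split(rows, labels)
        if split is not None:
            _, f, t = split
            left = [i for i, r in enumerate(rows) if r[f] <= t]
            right = [i for i, r in enumerate(rows) if r[f] > t]
            return (f, t,
                    build_tree([rows[i] for i in left], [labels[i] for i in left], depth + 1, max_depth),
                    build_tree([rows[i] for i in right], [labels[i] for i in right], depth + 1, max_depth))
    return max(set(labels), key=labels.count)  # leaf: the most common label


def predict(tree, row):
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


# Hypothetical customers: [age, lifetime value ($), engagement score 0-10]; 1 = resubscribed.
rows = [[25, 120, 2], [34, 900, 8], [45, 300, 3], [29, 850, 9], [52, 1100, 7], [41, 200, 1]]
labels = [0, 1, 0, 1, 1, 0]
tree = build_tree(rows, labels)
print(tree)                          # (1, 300, 0, 1): split on lifetime value at $300
print(predict(tree, [38, 700, 6]))   # 1
```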

Common use cases: lead scoring, upselling, ad placement, customer retention strategies

Random forest

For all their benefits, decision tree models are prone to overfitting: a single tree can latch onto noise in its training data. A random forest model compensates for that by training many decision trees, each on a random sample of the data and often a random subset of the features, and then combining their outputs, by majority vote for classifications or by averaging for continuous values. Taking the previous example of resubscription likelihood, one decision tree might give household income more weight in making its prediction, while another might weigh the level of engagement more heavily, and a third might weigh them equally. The random forest takes all these into consideration when making its predictions, which, like those of decision trees themselves, can be continuous values or classifications.
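Here is a small sketch of the random forest idea in plain Python: each tree is trained on a bootstrap sample (rows drawn with replacement) and a randomly chosen subset of features, and the forest predicts by majority vote. To keep it short, each “tree” is a depth-1 decision stump; real random forests grow much deeper trees. The data, `n_trees`, `max_features`, and function names are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((k / n) ** 2 for k in Counter(labels).values())


def fit_stump(rows, labels, features):
    """Depth-1 decision tree: the best single split, searching only the given features.
    Returns (feature, threshold, left_label, right_label)."""
    best = None
    for f in features:
        for t in sorted({r[f] for r in rows}):
            left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
            right = [lab for r, lab in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, t, Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    if best is None:  # sampled rows identical on these features: predict the majority label
        majority = Counter(labels).most_common(1)[0][0]
        return (features[0], float("inf"), majority, majority)
    return best[1:]


def fit_random_forest(rows, labels, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]          # bootstrap: rows with replacement
        feats = rng.sample(range(len(rows[0])), max_features)    # random feature subset per tree
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feats))
    return forest


def predict_forest(forest, row):
    """Majority vote across all trees."""
    votes = Counter(left if row[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]


# Hypothetical customers: [household income ($ thousands), engagement score 0-10]; 1 = resubscribed.
rows = [[40, 2], [55, 3], [48, 1], [90, 8], [120, 9], [75, 7], [62, 6], [35, 2]]
labels = [0, 0, 0, 1, 1, 1, 1, 0]
forest = fit_random_forest(rows, labels)
print(predict_forest(forest, [30, 0]), predict_forest(forest, [130, 10]))
```

Because each stump sees a different sample and only one feature, some trees lean on income and others on engagement, mirroring the example above; the vote smooths out the quirks of any single tree.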

Common use cases: customer churn, cross-selling, campaign performance prediction, customer segmentation

Gradient boosting machine (GBM)

Like random forests, gradient boosting machines help improve the effectiveness of decision tree models. But while a random forest builds many trees independently and aggregates them, a GBM builds one tree at a time: each new tree is fit to the errors (the differences between the actual values and the current predictions) of the ensemble built so far, and the process repeats until the combined model is highly accurate. Because GBMs are typically very accurate and can be tuned to handle outliers and imbalanced classes, they are often used when accuracy and precision are especially important, such as in healthcare and financial assessments. That said, they can also predict customer churn, purchasing behavior, and many other marketing outcomes.
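The sketch below shows gradient boosting for regression with squared-error loss, assuming a single numeric feature and depth-1 trees (stumps). Each round fits a stump to the current residuals and adds a damped copy of it to the running prediction. The ad-spend data, `n_rounds`, `learning_rate`, and function names are hypothetical; libraries such as XGBoost, LightGBM, or scikit-learn’s gradient boosting estimators add deeper trees, regularization, and other loss functions.

```python
def fit_regression_stump(xs, residuals):
    """Best single split on one feature; each side predicts the mean residual of its points.
    Returns (threshold, left_value, right_value)."""
    best = None
    for t in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    return best[1:]


def fit_gbm(xs, ys, n_rounds=50, learning_rate=0.3):
    """Each new stump is fit to the residuals (actual minus predicted), which are the
    negative gradient of the squared-error loss."""
    base = sum(ys) / len(ys)  # round 0: predict the mean for everyone
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        t, left_val, right_val = fit_regression_stump(xs, residuals)
        stumps.append((t, left_val, right_val))
        # Add a damped version of the new stump's correction to every prediction
        preds = [p + learning_rate * (left_val if x <= t else right_val) for x, p in zip(xs, preds)]
    return base, learning_rate, stumps


def predict_gbm(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(lv if x <= t else rv for t, lv, rv in stumps)


# Hypothetical data: weekly ad spend ($ thousands) vs. sales ($ thousands).
ad_spend = [1, 2, 3, 4, 5, 6, 7, 8]
sales = [10, 12, 15, 21, 30, 33, 35, 36]
model = fit_gbm(ad_spend, sales)
print(round(predict_gbm(model, 4.5), 1))
```

The small `learning_rate` means no single tree fully corrects the errors; many modest corrections tend to generalize better than a few aggressive ones.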

Common use cases: ad spend optimization, sales forecasting, personalized marketing

K-nearest neighbors (KNN)

You’ve probably come across outputs from models using k-nearest neighbor algorithms multiple times a week. They help drive recommendation engines by classifying new data based on how similar (or near) its features are to those of existing data. K represents the number of nearest neighbors used to make the prediction; if k is set to 5, the new data point is classified based on the five existing data points most similar to it (typically by majority vote), whereas a k of 1 means the new data will be classified based on just the single closest existing data point. In addition to being used for recommendation engines, KNN is often employed for image recognition tools, where it compares pixel values of new images to those of existing ones.
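A minimal KNN classifier in plain Python, assuming Euclidean distance and a simple majority vote among the k nearest neighbors. The shopper data, labels, and function name are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=5):
    """Classify `query` by majority vote among its k nearest training points (Euclidean distance)."""
    by_distance = sorted(zip(train_points, train_labels),
                         key=lambda pair: math.dist(pair[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical shoppers: [average order value ($), share of purchases in outdoor gear],
# each labeled with the product that shopper bought next.
shoppers = [[20, 0.9], [25, 0.8], [22, 0.85], [80, 0.1], [75, 0.2], [90, 0.05]]
next_purchase = ["hiking boots", "hiking boots", "hiking boots",
                 "espresso maker", "espresso maker", "espresso maker"]

print(knn_predict(shoppers, next_purchase, [24, 0.7], k=3))  # hiking boots
```

Because distance drives everything, features on very different scales (here, dollars versus a 0 to 1 share) should usually be standardized first; otherwise order value dominates the distance.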

Common use cases: product recommendations, personalized ads

K-means clustering

KNN models give a classification for each new data point based on labeled examples. K-means clustering, by contrast, is unsupervised: it groups unlabeled data based on each point’s similarity to a centroid, the center of a cluster. Here k represents the number of centroids (and therefore clusters) to be created, and each centroid is the mean of the data points assigned to it. The algorithm typically starts with k randomly chosen centroids, assigns every data point to its nearest centroid, recalculates each centroid as the mean of its assigned points, and repeats until the assignments stop changing. The result is clusters whose members are as similar to each other as possible while being dissimilar to the other clusters.

K-means clustering is often used for customer and audience segmentation: Consumers in the cluster most likely to purchase high-end items might receive different marketing emails than those in the cluster least likely to buy high-end items and those in a cluster of customers who purchase only sale items.
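The following sketch implements the standard k-means loop (assign each point to its nearest centroid, then recompute centroids as means) in plain Python. One assumption differs from the random initialization described above: for reproducibility, it seeds centroids deterministically with a farthest-first pick. The customer data and function names are hypothetical.

```python
import math


def farthest_first(points, k):
    """Deterministic seeding: start from the first point, then repeatedly add the point
    farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, max_iter=100):
    centroids = farthest_first(points, k)
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid
        assignments = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it
        new_centroids = []
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append(tuple(sum(vals) / len(members) for vals in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # empty cluster: leave its centroid in place
        if new_centroids == centroids:  # converged: assignments will no longer change
            break
        centroids = new_centroids
    return centroids, assignments


# Hypothetical customers: (annual spend in $ thousands, share of purchases made at full price).
customers = [(9.0, 0.95), (8.5, 0.90), (9.5, 0.85),   # premium buyers
             (4.0, 0.50), (4.5, 0.55), (3.5, 0.45),   # mid-range buyers
             (1.0, 0.05), (1.5, 0.00), (0.8, 0.10)]   # sale-only buyers
centroids, assignments = kmeans(customers, k=3)
print(assignments)  # [0, 0, 0, 2, 2, 2, 1, 1, 1]
```

The cluster numbers themselves are arbitrary; what matters is that each group of similar customers lands in its own cluster, which a marketing team could then target with different emails.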

Common use cases: customer segmentation, product grouping/upselling, campaign optimization

Principal component analysis (PCA)

The primary purpose of PCA is to reduce the number of variables within a data set while preserving as much of its information (variance) as possible, ideally without degrading model reliability. It does this by creating new variables, called principal components, each of which is a weighted combination of the original variables. The components are ordered by how much of the data’s variance they capture, so keeping only the first few retains most of the information. In and of itself, it won’t predict outcomes, but by minimizing the number of variables a predictive model has to analyze, it facilitates faster, more efficient outputs from the predictive model.
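As a sketch of the mechanics, the code below computes only the first principal component in plain Python: it centers the data, builds the covariance matrix, and uses power iteration to find the direction of greatest variance. The campaign metrics and function name are hypothetical; libraries such as scikit-learn’s `PCA` compute all components at once via singular value decomposition.

```python
import math


def first_principal_component(rows, iterations=100):
    """Return (direction, explained_variance_ratio, scores) for the first principal component.
    Power iteration on the covariance matrix converges to its top eigenvector."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)] for i in range(d)]
    v = [1.0] * d  # starting guess; must not be orthogonal to the top eigenvector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient: the variance captured along v (the top eigenvalue)
    top_variance = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    total_variance = sum(cov[i][i] for i in range(d))
    scores = [sum(r[j] * v[j] for j in range(d)) for r in centered]  # one new variable per row
    return v, top_variance / total_variance, scores


# Hypothetical campaign metrics: [emails opened, links clicked, site visits] (thousands).
campaigns = [[12, 3.1, 2.0], [20, 5.2, 3.9], [8, 2.0, 1.6], [15, 3.9, 2.8], [25, 6.1, 5.1]]
direction, ratio, scores = first_principal_component(campaigns)
print(f"PC1 captures {ratio:.0%} of the variance; weights = {[round(x, 2) for x in direction]}")
```

Here opens are on a much larger scale than the other metrics, so they dominate the first component; standardizing each feature before PCA is a common choice when variables use different units.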

Common use cases: feature selection/refinement, enhanced customer segmentation, ad performance analysis

Turn your team into a predictive powerhouse with Pecan AI

Overwhelmed by the myriad ML models out there? You’re not alone. That’s why Pecan has a robust library of models to help with any of the above use cases — and many, many more.

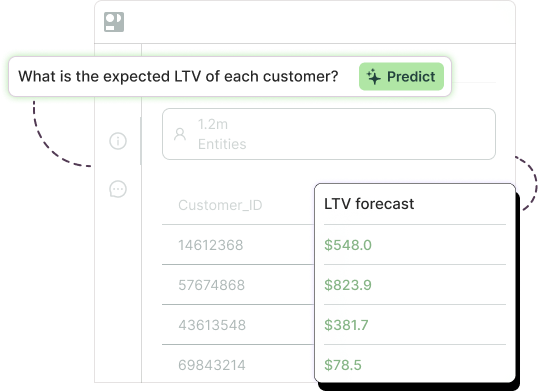

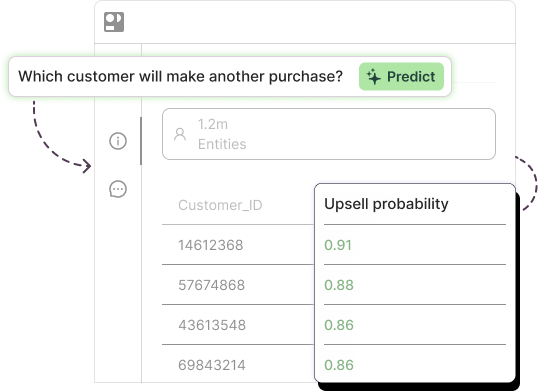

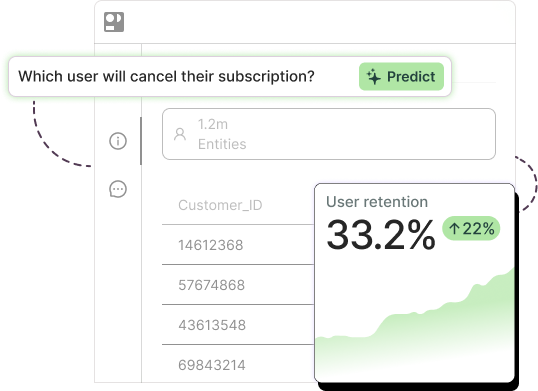

Pecan is a low-code predictive GenAI platform. You can use our automated features and GenAI assistant to isolate a business question, choose the optimal model, select the right features, and build a SQL model in minutes.

With Pecan, you don’t need an army of data scientists: Our platform gives users of all skill levels the power to rapidly experiment, test, and build the best, most accurate models for their marketing use cases.